NAOBEARD

Autonomous Systems Project - Spring 2020, Tilburg University

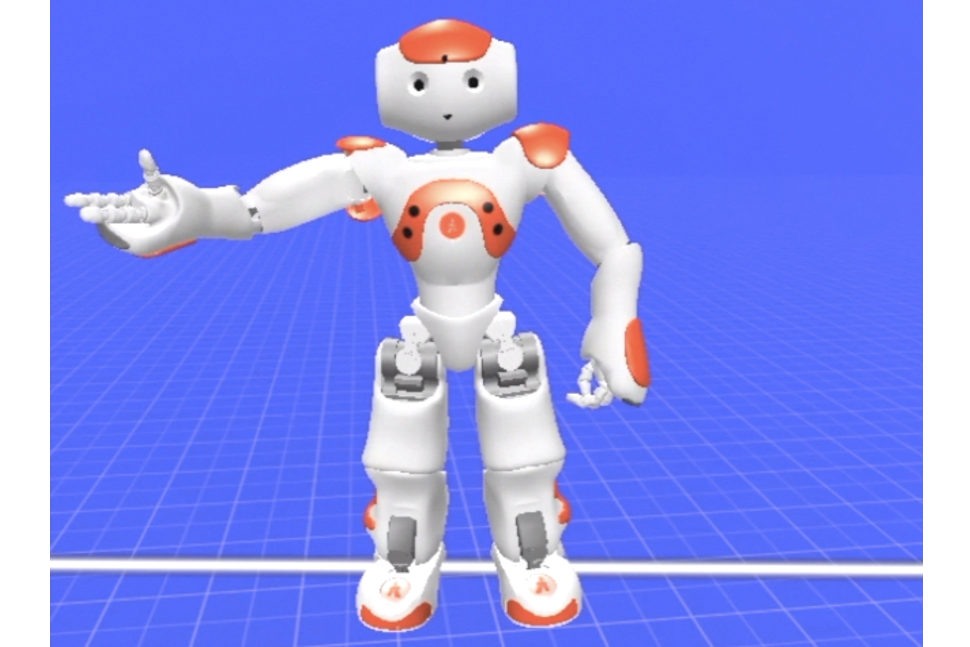

The aim of this project was to design a generic model that lets a robot tell a story multimodally. Based on findings from previous studies and our own analyses, we designed the model and wrote a short story to test it.

The model focuses on synchronizing the timing of premade gestures with speech. It follows Kendon’s three phases - preparation, nucleus, and retraction - which are commonly used in practice for the temporal segmentation of gestures. For this project, the model used only premade gestures and was given an annotated story that marks exactly where each gesture should be performed.

In the preparation phase, the model counts the syllables in the utterance and scales the length of the gesture accordingly. To do the scaling, it multiplies the gesture's timestamps by a weight.

The duration per syllable was set to 0.3 seconds, and the gesture duration was set to the maximum value of the timestamps. Because the minimum weight is 1, the model can lengthen a gesture but never shortens it, which guarantees a minimum gesture duration. With the adjusted timestamps and joints, the model executes the gesture and measures how long the performance actually took.
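The scaling step can be sketched as follows. This is a minimal illustration, not the project's actual code: the function name `scale_timestamps`, the nested-list layout of the timestamps (one list of times per joint), and the exact form of the weight (expected speech duration divided by gesture duration, floored at 1) are assumptions inferred from the description above.

```python
SECONDS_PER_SYLLABLE = 0.3  # value stated in the text


def scale_timestamps(timestamps, syllable_count,
                     seconds_per_syllable=SECONDS_PER_SYLLABLE):
    """Stretch a premade gesture so it lasts at least as long as the speech.

    `timestamps` is assumed to hold one list of times (in seconds) per joint.
    Returns the scaled timestamps and the weight that was applied.
    """
    # Gesture duration is the largest timestamp across all joints.
    gesture_duration = max(max(joint_times) for joint_times in timestamps)

    # Assumed weight: expected speech duration relative to gesture duration.
    expected_speech = syllable_count * seconds_per_syllable
    # A floor of 1 means the gesture is only ever extended, never shrunk.
    weight = max(1.0, expected_speech / gesture_duration)

    scaled = [[t * weight for t in joint_times] for joint_times in timestamps]
    return scaled, weight


if __name__ == "__main__":
    # A 2-second gesture paired with a 10-syllable utterance is stretched.
    scaled, weight = scale_timestamps([[0.5, 1.0], [1.0, 2.0]], 10)
    print(weight, scaled)
```

With a short utterance the weight stays at 1, so the gesture keeps its original timing.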

After the performance (retraction phase), if the gesture took less time than expected, the model waits before starting the next gesture so that the robot has time to finish the speech. Otherwise, it waits only for a preset interval of 0.7 seconds. In addition, to prevent an abrupt start of the next gesture, if the utterance has fewer than 5 syllables (usually one or two words), the model waits an extra 0.3 seconds.
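The waiting rule might look like the sketch below. The function and parameter names are hypothetical. The text does not spell out how the two cases combine, so the first branch rests on an assumption: when the gesture finishes early, the model waits for the remaining speech time plus the preset interval.

```python
def wait_after_gesture(expected_duration, actual_duration, syllable_count,
                       interval=0.7, short_utterance_syllables=5,
                       short_utterance_pause=0.3):
    """Return how long (in seconds) to pause before the next gesture."""
    if actual_duration < expected_duration:
        # Assumption: wait out the remaining speech time, then the interval.
        wait = (expected_duration - actual_duration) + interval
    else:
        # The gesture took at least as long as expected: preset interval only.
        wait = interval

    # Very short utterances (fewer than 5 syllables) get an extra pause
    # so the next gesture does not start abruptly.
    if syllable_count < short_utterance_syllables:
        wait += short_utterance_pause
    return wait


if __name__ == "__main__":
    # Gesture finished 0.5 s early on a 10-syllable utterance.
    print(wait_after_gesture(3.0, 2.5, 10))
    # Gesture ran long on a 3-syllable utterance.
    print(wait_after_gesture(0.9, 1.2, 3))
```

Keeping the thresholds as keyword arguments makes it easy to tune the interval and the short-utterance pause for a different story or robot.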

More details about the project can be found in the report file.